Coded Distributed Data Shuffling

1

Sponsors:

Problem Statement and Motivation

-

Data shuffling of training data among different computing nodes (workers) has been identified as a core element to improve the statistical performance of modern large scale machine learning algorithms. Data shuffling is often considered as one of the most significant bottlenecks in such systems due to the heavy communication load.

-

Under a master-worker architecture (where a master has access to the entire dataset and only communication between the master and the workers is allowed) coding has been recently proved to considerably reduce the communication load from the master to the workers.

-

In this work, we consider a different communication paradigm referred to as decentralized data shuffling, where workers are allowed to communicate with one another via a shared link.

Technical Approach

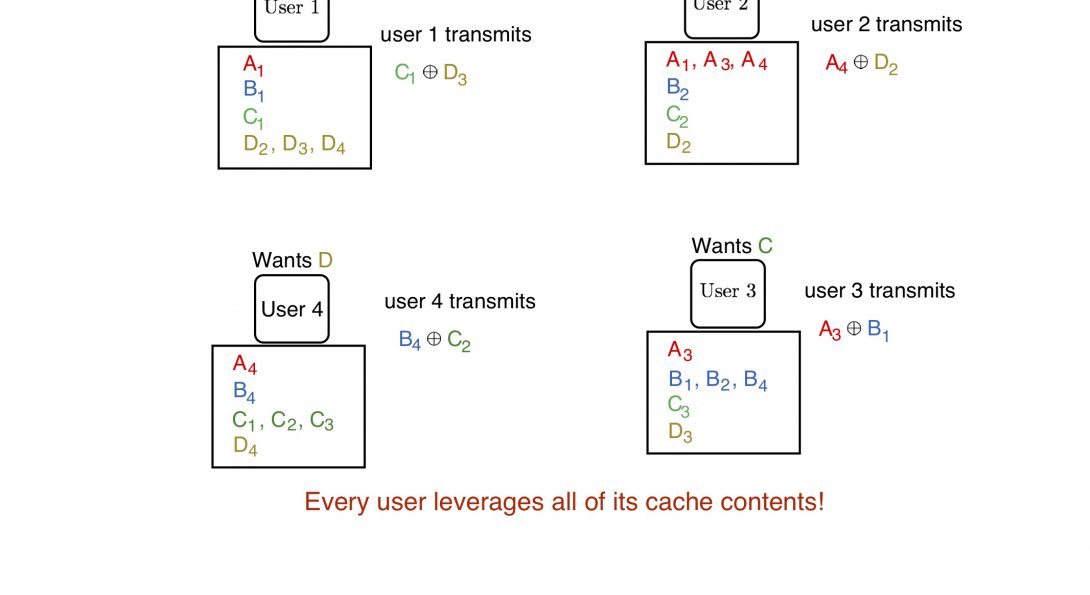

- The decentralized data shuffling problem has two phases: workers communicate with each other during the data shuffling phase, and then workers update their stored content during the storage phase. The goal to is design a shuffling code that requires the minimum possible data exchange among workers, regardless of the shuffle.

Key Achievements and Future Goals

- For the case of uncoded storage (i.e., each worker directly stores a subset of bits of the dataset), we propose converse and achievable bounds that are to within a factor of 3/2 of one another.

- As a by-product, a novel distributed clique-covering scheme is proposed for distributed broadcast with side information—a setting that includes as special cases decentralized data shuffling, distributed index coding, and device-to-device coded caching.

- Results presented at:

- Kai Wan, Daniela Tuninetti, Mingyue Ji, and Pablo Piantanida “Fundamental Limits of Distributed Data Shuffling,” Proceedings of the Fifty-sixth Annual Allerton Conference on Communication, Control and Computing (Allerton 2018), pp. 662-669. Monticello, IL USA, October 2018. DOI:0.1109/ALLERTON.2018.8635882.

-

Kai Wan, Daniela Tuninetti, Mingyue Ji, Giuseppe Caire, and Pablo Piantanida, “Fundamental Limits of Decentralized Data Shuffling.” Preprint available at arXiv:1807.00056. Submitted to IEEE Transactions on Information Theory in March 2019. T-IT-19-0199.